Generative diffusion models like Stable Diffusion, Flux, and video models such as Hunyuan rely on knowledge acquired during a single, resource-intensive training session using a fixed dataset. Any concepts introduced after this training – referred to as the knowledge cut-off – are absent from the model unless supplemented through fine-tuning or external adaptation techniques like Low Rank Adaptation (LoRA).

It would therefore be ideal if a generative system that outputs images or videos could reach out to online sources and bring them into the generation process as needed. In this way, for instance, a diffusion model that knows nothing about the very latest Apple or Tesla release could still produce images containing these new products.

In regard to language models, most of us are familiar with systems such as Perplexity, NotebookLM and ChatGPT-4o, which can incorporate novel external information in a Retrieval Augmented Generation (RAG) model.

RAG processes make ChatGPT 4o’s responses more relevant. Source: https://chatgpt.com/

However, this is an uncommon facility when it comes to generating images, and ChatGPT will confess its own limitations in this regard:

ChatGPT 4o has made a good guess about the visualization of a brand new watch release, based on the general line and on descriptions it has interpreted; but it cannot ‘absorb’ and integrate new images into a DALL-E-based generation.

Incorporating externally retrieved data into a generated image is challenging because the incoming image must first be broken down into tokens and embeddings, which are then mapped to the model’s nearest trained domain knowledge of the subject.

While this process works effectively for post-training tools like ControlNet, such manipulations remain largely superficial, essentially funneling the retrieved image through a rendering pipeline, but without deeply integrating it into the model’s internal representation.

As a result, the model lacks the ability to generate novel perspectives in the way that neural rendering systems like NeRF can, which construct scenes with true spatial and structural understanding.

Mature Logic

A similar limitation applies to RAG-based queries in Large Language Models (LLMs), such as Perplexity. When a model of this type processes externally retrieved data, it functions much like an adult drawing on a lifetime of knowledge to infer probabilities about a topic.

However, just as a person cannot retroactively integrate new information into the cognitive framework that shaped their fundamental worldview – when their biases and preconceptions were still forming – an LLM cannot seamlessly merge new knowledge into its pre-trained structure.

Instead, it can only ‘impact’ or juxtapose the new data against its existing internalized knowledge, using learned principles to analyze and conjecture rather than to synthesize at the foundational level.

This shortfall in equivalency between juxtaposed and internalized generation is likely to be more evident in a generated image than in a language-based generation: the deeper network connections and increased creativity of ‘native’ (rather than RAG-based) generation have been established in various studies.

Hidden Risks of RAG-Capable Image Generation

Even if it were technically feasible to seamlessly integrate retrieved internet images into newly synthesized ones in a RAG-style manner, safety-related limitations would present an additional challenge.

Many datasets used for training generative models have been curated to minimize the presence of explicit, racist, or violent content, among other sensitive categories. However, this process is imperfect, and residual associations can persist. To mitigate this, systems like DALL·E and Adobe Firefly rely on secondary filtering mechanisms that screen both input prompts and generated outputs for prohibited content.

As a result, a simple NSFW filter – one that primarily blocks overtly explicit content – would be insufficient for evaluating the acceptability of retrieved RAG-based data. Such content could still be offensive or harmful in ways that fall outside the model’s predefined moderation parameters, potentially introducing material that the AI lacks the contextual awareness to properly assess.

Discovery of a recent vulnerability in the CCP-produced DeepSeek, designed to suppress discussions of banned political content, has highlighted how alternative input pathways can be exploited to bypass a model’s ethical safeguards; arguably, this applies also to arbitrary novel data retrieved from the internet, when it is intended to be incorporated into a new image generation.

RAG for Image Generation

Despite these challenges and thorny political aspects, a number of projects have emerged that attempt to use RAG-based methods to incorporate novel data into visual generations.

ReDi

The 2023 Retrieval-based Diffusion (ReDi) project is a learning-free framework that speeds up diffusion model inference by retrieving similar trajectories from a precomputed knowledge base.

Values from a dataset can be ‘borrowed’ for a new generation in ReDi. Source: https://arxiv.org/pdf/2302.02285

In the context of diffusion models, a trajectory is the step-by-step path that the model takes to generate an image from pure noise. Normally, this process happens gradually over many steps, with each step refining the image a little more.

ReDi speeds this up by skipping many of those steps. Instead of calculating every single step, it retrieves a similar past trajectory from a database and jumps ahead to a later point in the process. This reduces the number of calculations needed, making diffusion-based image generation much faster, while still keeping the quality high.

ReDi does not modify the diffusion model’s weights, but instead uses the knowledge base to skip intermediate steps, thereby reducing the number of function estimations needed for sampling.
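The idea of jumping ahead along a retrieved trajectory can be illustrated with a toy sketch. Everything here is an assumption made for illustration: `toy_denoise_step` stands in for a real denoiser call, states are short lists of floats rather than latents, and the function names are hypothetical rather than taken from the ReDi codebase.

```python
import math

def l2(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def toy_denoise_step(x, target):
    """Stand-in for one denoiser call: move the state halfway toward a target."""
    return [xi + 0.5 * (ti - xi) for xi, ti in zip(x, target)]

def build_knowledge_base(starts, target, key_step, value_step):
    """Precompute (early state, later state) pairs from full trajectories."""
    kb = []
    for x in starts:
        key = None
        for step in range(1, value_step + 1):
            x = toy_denoise_step(x, target)
            if step == key_step:
                key = list(x)  # state after key_step steps
        kb.append((key, list(x)))  # state after value_step steps
    return kb

def sample_with_retrieval(x, target, kb, key_step, value_step, total_steps):
    """Run key_step steps, jump to a retrieved later state, then finish."""
    calls = 0
    for _ in range(key_step):
        x = toy_denoise_step(x, target)
        calls += 1
    # Retrieve the stored trajectory whose early state is closest to ours.
    _, value = min(kb, key=lambda pair: l2(pair[0], x))
    x = list(value)
    # Only the steps after value_step still need to be computed.
    for _ in range(total_steps - value_step):
        x = toy_denoise_step(x, target)
        calls += 1
    return x, calls

target = [0.0, 0.0]
kb = build_knowledge_base([[4.0, 4.0], [-4.0, 0.0]], target, key_step=2, value_step=8)
final_state, denoiser_calls = sample_with_retrieval(
    [3.5, 4.2], target, kb, key_step=2, value_step=8, total_steps=10
)
```

With these toy settings the sampler makes four denoiser calls instead of ten: two before the lookup and two after the jump. The model itself is never modified, and the saving comes entirely from the precomputed pairs.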

Of course, this is not the same as incorporating specific images at will into a generation request; but it does relate to similar types of generation.

Released in early 2023, shortly after the year in which latent diffusion models captured the public imagination, ReDi appears to be among the earliest diffusion-based approaches to lean on a RAG methodology.

Though it should be mentioned that in 2021 Facebook Research released Instance-Conditioned GAN, which sought to condition GAN images on novel image inputs, this kind of projection into the latent space is extremely common in the literature, both for GANs and diffusion models; the challenge is to make such a process training-free and functional in real time, as LLM-focused RAG methods are.

RDM

Another early foray into RAG-augmented image generation is Retrieval-Augmented Diffusion Models (RDM), which introduces a semi-parametric approach to generative image synthesis. Whereas traditional diffusion models store all learned visual knowledge within their neural network parameters, RDM relies on an external image database:

Retrieved nearest neighbors in an illustrative pseudo-query in RDM*.

During training, the model retrieves nearest neighbors (visually or semantically similar images) from the external database, to guide the generation process. This allows the model to condition its outputs on real-world visual instances.

The retrieval process is powered by CLIP embeddings, designed to force the retrieved images to share meaningful similarities with the query, and also to provide novel information to improve generation.
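Embedding-based retrieval of this kind usually reduces to a nearest-neighbor search over vectors. The sketch below is a minimal illustration under stated assumptions: the three-dimensional vectors are made-up stand-ins for real CLIP embeddings, the image names are hypothetical, and cosine similarity is assumed as the ranking measure.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, database, k):
    """Return the names of the k database entries most similar to the query."""
    ranked = sorted(
        database.items(),
        key=lambda item: cosine(query, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]

# Hypothetical embeddings; a real system would compute these with CLIP.
image_db = {
    "tabby_cat": [0.9, 0.1, 0.0],
    "siamese_cat": [0.8, 0.2, 0.1],
    "sports_car": [0.0, 0.1, 0.9],
}
query_embedding = [1.0, 0.1, 0.0]
neighbors = top_k(query_embedding, image_db, k=2)
```

Because the database is simply an argument to `top_k`, a different collection can be passed in without touching the retrieval logic, which is the property that makes a retrieval database replaceable after training.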

This reduces reliance on parameters, facilitating smaller models that achieve competitive results without the need for extensive training datasets.

The RDM approach supports post-hoc modifications: researchers can swap out the database at inference time, allowing for zero-shot adaptation to new styles, domains, or even entirely different tasks such as stylization or class-conditional synthesis.

In the lower rows, we see the nearest neighbors drawn into the diffusion process in RDM*.

A key advantage of RDM is its ability to improve image generation without retraining the model. By simply altering the retrieval database, the model can generalize to new concepts it was never explicitly trained on. This is particularly useful for applications where domain shifts occur, such as generating medical imagery based on evolving datasets, or adapting text-to-image models for creative applications.

On the negative side, retrieval-based methods of this kind depend on the quality and relevance of the external database, which makes data curation an important factor in achieving high-quality generations; and this approach remains far from an image synthesis equivalent of the kind of RAG-based interactions typical in commercial LLMs.

ReMoDiffuse

ReMoDiffuse is a retrieval-augmented motion diffusion model designed for 3D human motion generation. Unlike traditional motion generation models that rely purely on learned representations, ReMoDiffuse retrieves relevant motion samples from a large motion dataset and integrates them into the denoising process, in a schema similar to RDM (see above).

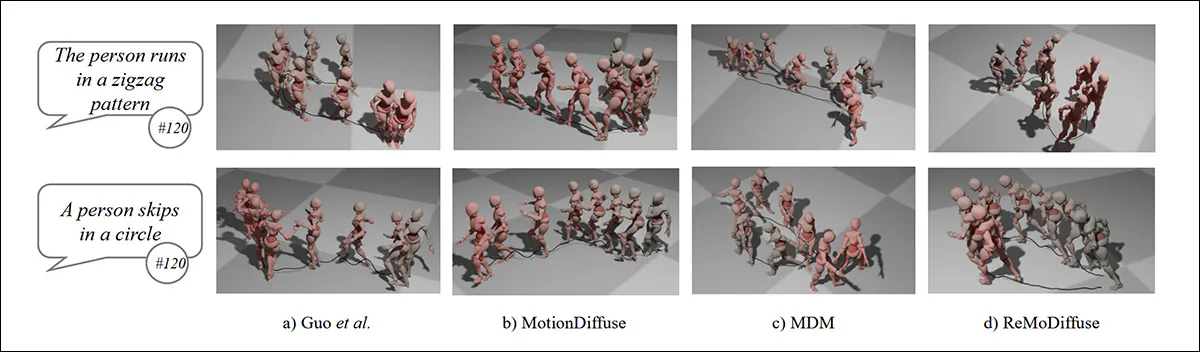

Comparison of RAG-augmented ReMoDiffuse (right-most) to prior methods. Source: https://arxiv.org/pdf/2304.01116

This allows the model to generate motion sequences designed to be more natural and diverse, as well as semantically faithful to the user’s text prompts.

ReMoDiffuse uses an innovative hybrid retrieval mechanism, which selects motion sequences based on both semantic and kinematic similarities, with the intention of ensuring that the retrieved motions are not just thematically relevant but also physically plausible when integrated into the new generation.
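One way to picture a hybrid score of this kind is as a semantic similarity that is discounted when a candidate motion differs too much in a simple kinematic property. The sketch below is illustrative only: using sequence length as the kinematic feature, the exponential penalty, the `lam` parameter and all names are assumptions, and this should not be read as the exact formula used in ReMoDiffuse.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def hybrid_score(query_emb, query_len, cand_emb, cand_len, lam=1.0):
    """Semantic similarity, discounted by the relative gap in motion length."""
    semantic = cosine(query_emb, cand_emb)
    relative_gap = abs(cand_len - query_len) / max(cand_len, query_len)
    return semantic * math.exp(-lam * relative_gap)

# Hypothetical candidates: (text embedding, number of frames).
candidates = {
    "walk_short": ([0.9, 0.1], 60),
    "walk_long": ([0.9, 0.1], 240),
    "jump": ([0.1, 0.9], 60),
}
query_emb, query_len = [1.0, 0.0], 64
best = max(
    candidates,
    key=lambda name: hybrid_score(query_emb, query_len, *candidates[name]),
)
```

In this toy setup the two walking clips are equally close to the prompt semantically, so the length term decides between them, favoring the clip whose duration is closer to the requested one.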

The model then refines these retrieved samples using a Semantics-Modulated Transformer, which selectively incorporates knowledge from the retrieved motions while maintaining the characteristic qualities of the generated sequence:

Schema for ReMoDiffuse’s pipeline.

The project’s Condition Mixture technique enhances the model’s ability to generalize across different prompts and retrieval conditions, balancing retrieved motion samples with text prompts during generation, and adjusting how much weight each source gets at each step.

This can help prevent unrealistic or repetitive outputs, even for rare prompts. It also addresses the scale sensitivity issue that often arises in the classifier-free guidance techniques commonly used in diffusion models.
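To make the contrast concrete, the sketch below sets standard classifier-free guidance, which relies on one hand-tuned scale, beside a weighted mixture over several conditional predictions. The mixture is an assumption-laden illustration: the four-way split (text plus retrieval, text only, retrieval only, neither), the softmax parameterization of the weights and the function names are hypothetical, not confirmed details of the ReMoDiffuse implementation.

```python
import math

def softmax(logits):
    """Turn arbitrary real-valued logits into weights that sum to 1."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classifier_free_guidance(uncond, cond, scale):
    """Standard CFG: extrapolate from the unconditional prediction."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]

def condition_mixture(predictions, logits):
    """Blend several predictions using normalized (assumed learnable) weights."""
    weights = softmax(logits)
    dim = len(predictions[0])
    return [
        sum(w * pred[i] for w, pred in zip(weights, predictions))
        for i in range(dim)
    ]

# Hypothetical one-dimensional predictions under four conditioning settings.
preds = [[1.0], [2.0], [3.0], [4.0]]  # text+retrieval, text, retrieval, none
equal_mix = condition_mixture(preds, [0.0, 0.0, 0.0, 0.0])
guided = classifier_free_guidance([0.0], [1.0], scale=7.5)
```

The design point is that a mixture with normalized weights stays within the range spanned by its inputs, whereas the guided output grows in proportion to the chosen scale, which is one way to understand why a single scale can be a sensitive setting.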

RA-CM3

Stanford’s 2023 paper Retrieval-Augmented Multimodal Language Modeling (RA-CM3) allows the system to access real-world information at inference time:

Stanford’s Retrieval-Augmented Multimodal Language Modeling (RA-CM3) model uses internet-retrieved images to augment the generation process, but remains a prototype without public access. Source: https://cs.stanford.edu/~myasu/information/RACM3_slides.pdf

RA-CM3 integrates retrieved text and images into the generation pipeline, enhancing both text-to-image and image-to-text synthesis. Using CLIP for retrieval and a Transformer as the generator, the model refers to pertinent multimodal documents before composing an output.

Benchmarks on MS-COCO present notable enhancements over DALL-E and comparable techniques, reaching a 12-point Fréchet Inception Distance (FID) discount, with far decrease computational price.

However, as with other retrieval-augmented approaches, RA-CM3 does not seamlessly internalize its retrieved knowledge. Rather, it superimposes new data against its pre-trained network, much like an LLM augmenting responses with search results. While this method can improve factual accuracy, it does not replace the need for training updates in domains where deep synthesis is required.

Furthermore, a practical implementation of this system does not appear to have been released, even to an API-based platform.

RealRAG

A new release from China, and the one that has prompted this look at RAG-augmented generative image systems, is called Retrieval-Augmented Realistic Image Generation (RealRAG).

External images drawn into RealRAG (lower middle). Source: https://arxiv.org/pdf/2502.00848

RealRAG retrieves actual images of relevant objects from a database curated from publicly available datasets such as ImageNet, Stanford Cars, Stanford Dogs, and Oxford Flowers. It then integrates the retrieved images into the generation process, addressing knowledge gaps in the model.

A key component of RealRAG is self-reflective contrastive learning, which trains a retrieval model to find informative reference images, rather than just selecting visually similar ones.

The authors state:

‘Our key insight is to train a retriever that retrieves images staying off the generation space of the generator, yet closing to the representation of text prompts.

‘To this [end], we first generate images from the given text prompts and then utilize the generated images as queries to retrieve the most relevant images in the real-object-based database. These most relevant images are utilized as reflective negatives.’

This approach is intended to ensure that the retrieved images contribute missing knowledge to the generation process, rather than reinforcing existing biases in the model.
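The procedure the authors describe can be sketched in two parts: selecting ‘reflective negatives’ by querying the real-image database with a generated image, and then scoring a retriever with a contrastive objective. The sketch below rests on several assumptions that are not taken from the paper: embeddings are made-up two-dimensional vectors, the positive is taken to be a real image paired with the prompt, and the loss is a generic InfoNCE-style form rather than RealRAG’s exact objective.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def reflective_negatives(generated_emb, real_db, k):
    """Real images closest to what the generator already produces."""
    ranked = sorted(
        real_db,
        key=lambda name: cosine(generated_emb, real_db[name]),
        reverse=True,
    )
    return ranked[:k]

def contrastive_loss(text_emb, positive_emb, negative_embs, temperature=0.1):
    """InfoNCE-style loss: pull the positive toward the text, push negatives away."""
    pos = math.exp(cosine(text_emb, positive_emb) / temperature)
    neg = sum(
        math.exp(cosine(text_emb, n) / temperature) for n in negative_embs
    )
    return -math.log(pos / (pos + neg))

# Hypothetical embeddings of real images in the database.
real_db = {
    "familiar_view": [1.0, 0.0],
    "near_familiar": [0.9, 0.1],
    "novel_detail": [0.0, 1.0],
}
generated_emb = [1.0, 0.0]  # embedding of an image the generator produced
negatives = reflective_negatives(generated_emb, real_db, k=2)
```

Under these assumptions, a retriever trained with such a loss is rewarded for ranking images that lie outside the generator’s existing output space, while still matching the text prompt.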

Left-most, the retrieved reference image; center, without RAG; rightmost, with the use of the retrieved image.

However, the reliance on retrieval quality and database coverage means that its effectiveness can vary depending on the availability of high-quality references. If a relevant image does not exist in the dataset, the model may still struggle with unfamiliar concepts.

RealRAG is a very modular architecture, offering compatibility with multiple other generative architectures, including U-Net-based, DiT-based, and autoregressive models.

In general, the retrieving and processing of external images adds computational overhead, and the system’s performance depends on how well the retrieval mechanism generalizes across different tasks and datasets.

Conclusion

This is a representative rather than exhaustive overview of image-retrieving multimodal generative systems. Some systems of this type use retrieval solely to improve vision understanding or dataset curation, among other diverse motives, rather than seeking to generate images. One example is Internet Explorer.

Many of the other RAG-integrated projects in the literature remain unreleased. Prototypes, with only published research, include Re-Imagen, which – despite its provenance from Google – can only access images from a local custom database.

Also, in November 2024, Baidu announced Image-Based Retrieval-Augmented Generation (iRAG), a new platform that uses retrieved images ‘from a database’. Though iRAG is reportedly available on the Ernie platform, there seem to be no further details about this retrieval process, which appears to rely on a local database (i.e., local to the service and not directly accessible to the user).

Further, the 2024 paper Unified Text-to-Image Generation and Retrieval offers yet another RAG-based method of using external images to augment results at generation time – again, from a local database rather than from ad hoc internet sources.

Excitement around RAG-based augmentation in image generation is likely to focus on systems that can incorporate internet-sourced or user-uploaded images directly into the generative process, and which allow users to participate in the choices or sources of images.

However, this is a significant challenge for at least two reasons: firstly, because the effectiveness of such systems usually depends on deeply integrated relationships formed during a resource-intensive training process; and secondly, because concerns over safety, legality, and copyright restrictions, as noted earlier, make this an unlikely feature for an API-driven web service, and for commercial deployment in general.

* Source: https://proceedings.neurips.cc/paper_files/paper/2022/file/62868cc2fc1eb5cdf321d05b4b88510c-Paper-Conference.pdf

First published Tuesday, February 4, 2025