The following essay is reprinted with permission from The Conversation, an online publication covering the latest research.

We’re increasingly aware of how misinformation can influence elections. About 73% of Americans report seeing misleading election news, and about half struggle to discern what is true or false.

When it comes to misinformation, “going viral” appears to be more than a simple catchphrase. Scientists have found a close analogy between the spread of misinformation and the spread of viruses. In fact, how misinformation gets around can be effectively described using mathematical models designed to simulate the spread of pathogens.


Concerns about misinformation are widely held, with a recent UN survey suggesting that 85% of people worldwide are worried about it.

These concerns are well founded. Foreign disinformation has grown in sophistication and scope since the 2016 US election. The 2024 election cycle has seen dangerous conspiracy theories about “weather manipulation” undermining the proper management of hurricanes, fake news about immigrants eating pets inciting violence against the Haitian community, and misleading election conspiracy theories amplified by the world’s richest man, Elon Musk.

Recent studies have employed mathematical models drawn from epidemiology (the study of how diseases occur in the population and why). These models were originally developed to study the spread of viruses, but can be effectively used to study the diffusion of misinformation across social networks.

One class of epidemiological models that works for misinformation is known as the susceptible-infectious-recovered (SIR) model. These models simulate the dynamics between susceptible (S), infected (I), and recovered or resistant individuals (R).

These models are built from a series of differential equations (which help mathematicians understand rates of change) and readily apply to the spread of misinformation. For instance, on social media, false information is propagated from individual to individual, some of whom become infected while others remain immune. Still others serve as asymptomatic vectors (carriers of disease), spreading misinformation without knowing it or being adversely affected by it.
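To make the differential equations concrete, here is a minimal sketch of the textbook SIR equations, dS/dt = -βSI, dI/dt = βSI - γI and dR/dt = γI, integrated with a simple forward-Euler step. S, I and R are treated as fractions of a population. The function name `simulate_sir` and the parameter values (β = 0.3 per day, γ = 0.1 per day, 0.1% of users initially spreading) are hypothetical choices for illustration, not values taken from the studies discussed here.

```python
from dataclasses import dataclass


@dataclass
class SIRState:
    s: float  # susceptible: has not yet encountered or accepted the story
    i: float  # "infected": believes the story and may pass it on
    r: float  # recovered/resistant: no longer spreads it


def simulate_sir(beta: float, gamma: float, i0: float,
                 days: float, dt: float = 0.01) -> list[SIRState]:
    """Forward-Euler integration of the classic SIR equations
    (all compartments are fractions of the population):

        dS/dt = -beta * S * I
        dI/dt =  beta * S * I - gamma * I
        dR/dt =  gamma * I
    """
    state = SIRState(s=1.0 - i0, i=i0, r=0.0)
    history = [state]
    for _ in range(round(days / dt)):
        new_infections = beta * state.s * state.i * dt  # S -> I this step
        recoveries = gamma * state.i * dt               # I -> R this step
        state = SIRState(
            s=state.s - new_infections,
            i=state.i + new_infections - recoveries,
            r=state.r + recoveries,
        )
        history.append(state)
    return history


# Hypothetical parameters: 0.1% of users start out spreading the story.
trajectory = simulate_sir(beta=0.3, gamma=0.1, i0=0.001, days=160)
peak = max(trajectory, key=lambda st: st.i)
print(f"peak share of users spreading: {peak.i:.1%}")
print(f"share ever reached: {1 - trajectory[-1].s:.1%}")
```

Forward Euler with a small step is used here only for transparency; a more careful model would typically rely on an adaptive ODE solver such as SciPy’s `solve_ivp`.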

These models are highly useful because they allow us to predict and simulate population dynamics and to come up with measures such as the basic reproduction number (R0): the average number of cases generated by one “infected” individual.
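In the basic SIR model, R0 has a simple closed form: an “infected” user generates new cases at rate β per day and keeps spreading for 1/γ days on average, so R0 = β/γ. The sketch below, using hypothetical rates and helper names, computes R0 and checks the standard condition that the number of spreaders grows only while R0 multiplied by the susceptible fraction exceeds 1.

```python
def basic_reproduction_number(beta: float, gamma: float) -> float:
    """R0 in the basic SIR model: new cases per spreader per day (beta)
    multiplied by the average number of days spent spreading (1 / gamma)."""
    return beta / gamma


def is_spreading(r0: float, susceptible_fraction: float) -> bool:
    """dI/dt = (beta * S - gamma) * I is positive exactly when R0 * S > 1."""
    return r0 * susceptible_fraction > 1.0


# Hypothetical rates: 0.3 new "infections" per spreader per day, and users
# keep sharing for about 10 days on average (gamma = 0.1 per day).
r0 = basic_reproduction_number(beta=0.3, gamma=0.1)
print(f"R0 = {r0:.1f}")
for s in (1.0, 0.5, 0.2):
    trend = "growing" if is_spreading(r0, s) else "shrinking"
    print(f"{s:.0%} of users still susceptible: outbreak {trend}")
```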

As a result, there has been growing interest in applying such epidemiological approaches to our information ecosystem. Most social media platforms have an estimated R0 greater than 1, indicating that they have the potential for the epidemic-like spread of misinformation.
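Why the threshold of 1 matters can be seen from the classic SIR final-size relation, z = 1 - exp(-R0 · z), where z is the fraction of an initially fully susceptible, well-mixed population that is ever reached. The minimal sketch below solves it by bisection. It assumes the textbook homogeneous-mixing SIR model rather than any real platform, and the R0 values in the loop are illustrative, not platform estimates.

```python
import math


def final_size(r0: float, tol: float = 1e-12) -> float:
    """Fraction of users ever 'infected' in a simple SIR outbreak.

    Solves z = 1 - exp(-r0 * z). For r0 <= 1 the only solution is z = 0,
    i.e. no large outbreak takes off.
    """
    if r0 <= 1.0:
        return 0.0

    def f(z: float) -> float:
        return z - 1.0 + math.exp(-r0 * z)

    # For r0 > 1, f is negative just above 0 and positive at 1,
    # so the non-trivial root lies in between.
    lo, hi = 1e-9, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


for r0 in (0.8, 1.5, 3.0):
    print(f"R0 = {r0}: about {final_size(r0):.0%} of users ever reached")
```

Bisection is used instead of simple fixed-point iteration because it converges reliably for any R0 above 1.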

Seeking solutions

Mathematical modelling typically involves either what is called phenomenological research (where researchers describe observed patterns) or mechanistic work (which involves making predictions based on known relationships). These models are especially useful because they allow us to explore how possible interventions might help reduce the spread of misinformation on social networks.

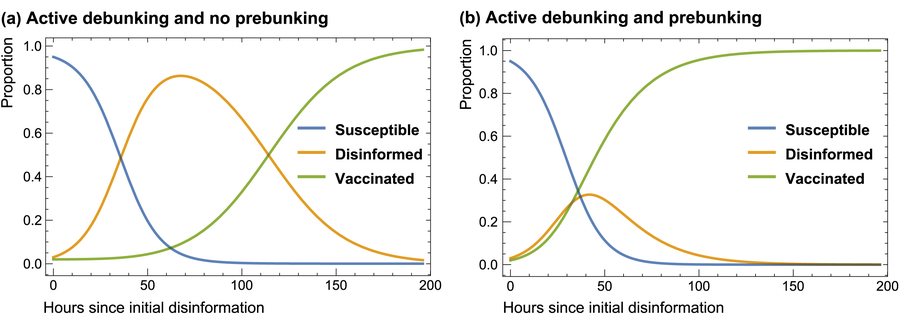

We can illustrate this basic process with the simple model shown in the accompanying graph, which lets us explore how a system might evolve under a variety of hypothetical assumptions that can then be tested.

Prominent social media figures with large followings can become “superspreaders” of election disinformation, blasting falsehoods to potentially hundreds of millions of people. This reflects the current situation, in which election officials report being outmatched in their attempts to fact-check misinformation.

In our model, if we conservatively assume that people have only a 10% chance of infection after exposure, debunking misinformation has only a small effect, consistent with studies. Under the 10% chance of infection scenario, the population infected by election misinformation grows rapidly (orange line, left panel).

A ‘compartment’ model of disinformation spread over a week in a cohort of users, where disinformation has a 10% chance of infecting a susceptible, unvaccinated person upon exposure. Debunking is assumed to be 5% effective. If prebunking is introduced and is about twice as effective as debunking, the dynamics of disinformation infection change markedly.

Sander van der Linden/Robert David Grimes
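The caption’s assumptions can be turned into a toy compartment model. The sketch below is not the authors’ model. Only the 10% infection chance per exposure, the 5% debunking figure and the 2:1 prebunking-to-debunking ratio come from the caption; everything else is a hypothetical choice: hourly steps, one contact per user per hour, 1% of users initially “infected”, and reading “5% effective” debunking as moving 5% of infected users to the resistant group each hour, with prebunking moving 10% of still-susceptible users there each hour. The function name `run_week` is likewise illustrative.

```python
def run_week(prebunk_rate: float, p_infect: float = 0.10,
             debunk_rate: float = 0.05, contacts_per_hour: float = 1.0,
             i0: float = 0.01, hours: int = 7 * 24) -> tuple[float, float]:
    """Hourly S-I-R sketch of one week of spread in a cohort of users.

    Returns (peak infected fraction, infected fraction at the end of the week).
    The resistant (debunked or prebunked) share is always 1 - s - i.
    """
    s, i = 1.0 - i0, i0
    peak = i
    for _ in range(hours):
        exposures = contacts_per_hour * i          # contacts with spreaders per user
        new_infections = p_infect * exposures * s  # 10% chance per exposure
        debunked = debunk_rate * i                 # corrected after being infected
        prebunked = prebunk_rate * s               # inoculated before exposure
        s -= new_infections + prebunked
        i += new_infections - debunked
        peak = max(peak, i)
    return peak, i


for label, rate in (("debunking only", 0.0), ("debunking + prebunking", 0.10)):
    peak, final = run_week(prebunk_rate=rate)
    print(f"{label}: peak {peak:.1%} infected, {final:.1%} still infected after a week")
```

Because prebunking acts on people before they are exposed, it shrinks the susceptible pool that fuels new infections, whereas debunking only removes people who have already been infected.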

Psychological ‘vaccination’

The viral analogy for misinformation is fitting precisely because it allows scientists to simulate ways to counter its spread. These interventions include an approach called “psychological inoculation”, also known as prebunking.

This is where researchers pre-emptively introduce, and then refute, a falsehood so that people gain future immunity to misinformation. It is similar to vaccination, where people are given a (weakened) dose of a virus to prime their immune systems for future exposure.

For example, a recent study used AI chatbots to come up with prebunks against common election fraud myths. This involved warning people in advance that political actors might manipulate their opinion with sensational stories, such as the false claim that “massive overnight vote dumps are flipping the election”, along with key tips on how to spot such misleading rumours. These ‘inoculations’ can be integrated into population models of the spread of misinformation.
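One way inoculation enters a population model is through a standard epidemiological result: if a share v of users is inoculated in advance and each is protected with probability e (the efficacy), the reproduction number falls to R0 × (1 - v·e), and spread cannot take off once v·e exceeds 1 - 1/R0. The sketch below assumes permanent protection and random mixing, which real prebunks, whose effects are partial and can fade, will not fully satisfy. The helper names, R0 values and the 80% efficacy are hypothetical.

```python
def effective_r(r0: float, coverage: float, efficacy: float = 1.0) -> float:
    """Reproduction number after inoculating a share `coverage` of users,
    each of whom is protected with probability `efficacy`."""
    return r0 * (1.0 - coverage * efficacy)


def critical_coverage(r0: float, efficacy: float = 1.0) -> float:
    """Smallest coverage that brings the reproduction number down to 1.

    A result above 1 means even inoculating everyone would not be enough.
    """
    if r0 <= 1.0:
        return 0.0
    return (1.0 - 1.0 / r0) / efficacy


for r0 in (1.5, 2.0, 4.0):
    print(f"R0 = {r0}: inoculate at least {critical_coverage(r0):.0%} of users "
          f"({critical_coverage(r0, efficacy=0.8):.0%} if prebunks protect 80% of people)")
```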

You can see in our graph that if prebunking is not employed, it takes much longer for people to build up immunity to misinformation (left panel, orange line). The right panel illustrates how, if prebunking is deployed at scale, it can contain the number of people who are disinformed (orange line).

The point of these models is not to make the problem sound scary or to suggest that people are gullible disease vectors. But there is clear evidence that some fake news stories do spread like a simple contagion, infecting users immediately.

Meanwhile, other stories behave more like a complex contagion, where people require repeated exposure to misleading sources of information before they become “infected”.
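The difference between simple and complex contagion can be sketched with a threshold rule on a network: under simple contagion one exposure suffices, whereas under complex contagion a user adopts a story only after hearing it from at least k distinct contacts. This toy version is deterministic (every exposure counts) and uses a hypothetical ring network in which each of 100 users is linked to the two nearest users on each side. It is a generic textbook illustration, not a model from the studies mentioned here.

```python
def ring_network(n: int, reach: int = 2) -> dict[int, set[int]]:
    """Each of n users is connected to the `reach` nearest users on each side."""
    return {
        u: {(u + d) % n for d in range(-reach, reach + 1) if d != 0}
        for u in range(n)
    }


def spread(network: dict[int, set[int]], seeds: set[int], threshold: int) -> set[int]:
    """Threshold contagion: a user becomes 'infected' once at least `threshold`
    of their contacts are infected. threshold=1 mimics a simple contagion;
    threshold>=2 mimics a complex one that needs repeated exposure."""
    infected = set(seeds)
    changed = True
    while changed:
        changed = False
        for user, contacts in network.items():
            if user not in infected and len(contacts & infected) >= threshold:
                infected.add(user)
                changed = True
    return infected


net = ring_network(100)
print(len(spread(net, {0}, threshold=1)))      # simple, one seed: 100
print(len(spread(net, {0}, threshold=2)))      # complex, one seed: 1
print(len(spread(net, {0, 1}, threshold=2)))   # complex, two neighbouring seeds: 100
print(len(spread(net, {0, 50}, threshold=2)))  # complex, two distant seeds: 2
```

The last two lines show why complex contagions depend on clustered, reinforcing exposure: the same two seeds spread everywhere when they share contacts and nowhere when they do not.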

The fact that individual susceptibility to misinformation can vary does not detract from the usefulness of approaches drawn from epidemiology. For example, the models can be adjusted depending on how easy or difficult it is for misinformation to “infect” different sub-populations.
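Adjusting for sub-populations can be as simple as splitting the SIR compartments into groups with different per-exposure susceptibility. In this two-group sketch everyone mixes at random and spreaders are equally contagious, so R0 = (β/γ) × Σ (group size × relative susceptibility). The group sizes (30% and 70%), the relative susceptibilities (2 and 1), β = 0.15 and γ = 0.1 are all hypothetical, as are the helper names.

```python
def group_r0(beta: float, gamma: float,
             sizes: tuple[float, ...], susceptibility: tuple[float, ...]) -> float:
    """R0 with random mixing when groups differ only in susceptibility."""
    return beta / gamma * sum(n * sig for n, sig in zip(sizes, susceptibility))


def multi_group_sir(beta: float, gamma: float,
                    sizes: tuple[float, ...], susceptibility: tuple[float, ...],
                    i0: float = 0.001, days: float = 200,
                    dt: float = 0.01) -> list[float]:
    """Forward-Euler SIR with one S and I compartment per group.

    Returns the fraction of each group ever infected.
    """
    s = [n * (1 - i0) for n in sizes]
    i = [n * i0 for n in sizes]
    for _ in range(round(days / dt)):
        total_i = sum(i)  # random mixing: everyone meets the same spreaders
        new = [beta * sig * s_g * total_i * dt for sig, s_g in zip(susceptibility, s)]
        rec = [gamma * i_g * dt for i_g in i]
        s = [s_g - n_g for s_g, n_g in zip(s, new)]
        i = [i_g + n_g - r_g for i_g, n_g, r_g in zip(i, new, rec)]
    return [1 - s_g / n for s_g, n in zip(s, sizes)]


sizes = (0.3, 0.7)           # hypothetical: 30% of users are more susceptible
susceptibility = (2.0, 1.0)  # relative chance of 'infection' per exposure
attack = multi_group_sir(0.15, 0.1, sizes, susceptibility)
print(f"R0 = {group_r0(0.15, 0.1, sizes, susceptibility):.2f}")
print(f"ever infected: {attack[0]:.0%} of the susceptible group, {attack[1]:.0%} of the rest")
```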

Although thinking of people in this way might be psychologically uncomfortable for some, most misinformation is diffused by small numbers of influential superspreaders, just as happens with viruses.

Taking an epidemiological approach to the study of fake news allows us to predict its spread and model the effectiveness of interventions such as prebunking.

Some recent work validated the viral approach using social media dynamics from the 2020 US presidential election. The study found that a combination of interventions can be effective in reducing the spread of misinformation.

Models are never perfect. But if we want to stop the spread of misinformation, we need to understand it in order to effectively counter its societal harms.

This article was originally published on The Conversation. Read the original article.