Image by Author

I firmly believe that to get hired in the field of artificial intelligence, you need a strong portfolio. This means you need to show recruiters that you can build AI models and applications that solve real-world problems.

In this blog, we will review 7 AI portfolio projects that will boost your resume. These projects come with tutorials, source code, and other supporting materials to help you build proper AI applications.

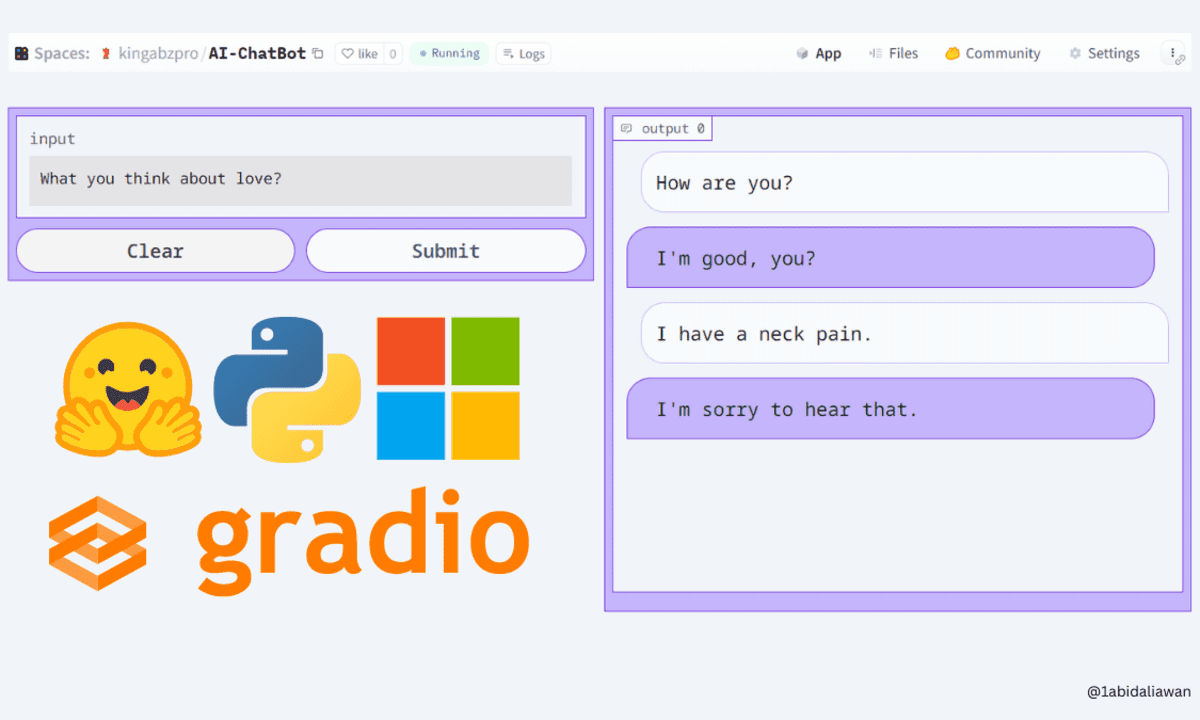

1. Build and Deploy your Machine Learning Application in 5 Minutes

Project link: Build AI Chatbot in 5 Minutes with Hugging Face and Gradio

Screenshot from the project

In this project, you will build a chatbot application and deploy it on Hugging Face Spaces. It’s a beginner-friendly AI project that requires minimal knowledge of language models and Python. First, you will learn the various components of the Gradio Python library to build a chatbot application, and then you will use the Hugging Face ecosystem to load the model and deploy it.

It’s that easy.
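To give a rough sense of how little code a Gradio chatbot needs, here is a minimal sketch. It assumes the `gradio` package is installed. The `respond` function is a hypothetical placeholder that simply echoes the message; in the actual project the reply would come from a language model loaded through the Hugging Face ecosystem.

```python
def respond(message: str, history: list) -> str:
    """Placeholder reply logic (assumption): echoes the user message.

    In a real app, this is where you would call a language model.
    """
    return f"You said: {message}"


def build_demo():
    """Wraps `respond` in a chat UI."""
    # Imported lazily so the reply logic can be tested without Gradio installed.
    import gradio as gr

    # ChatInterface connects a chat UI to a function of (message, history).
    return gr.ChatInterface(fn=respond, title="Echo chatbot (sketch)")


# To start the app locally, call: build_demo().launch()
```

Swapping the body of `respond` for a real model call is the only change needed to turn this sketch into a working chatbot.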

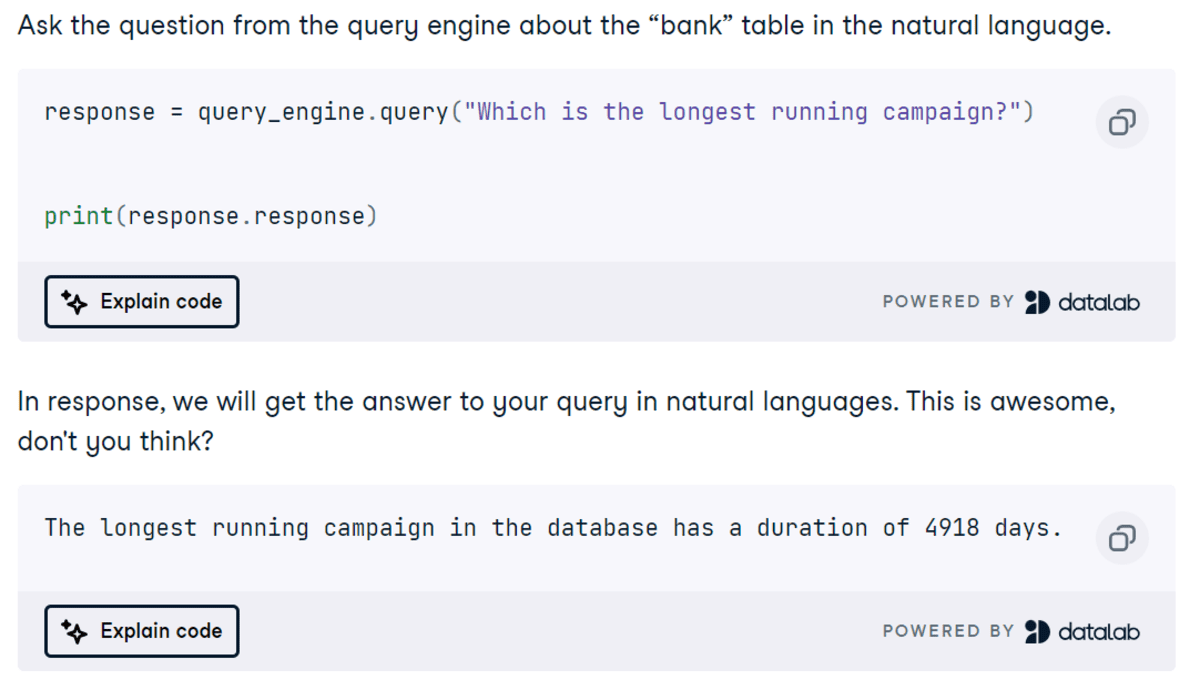

2. Build AI Projects using DuckDB: SQL Query Engine

Project link: DuckDB Tutorial: Building AI Projects

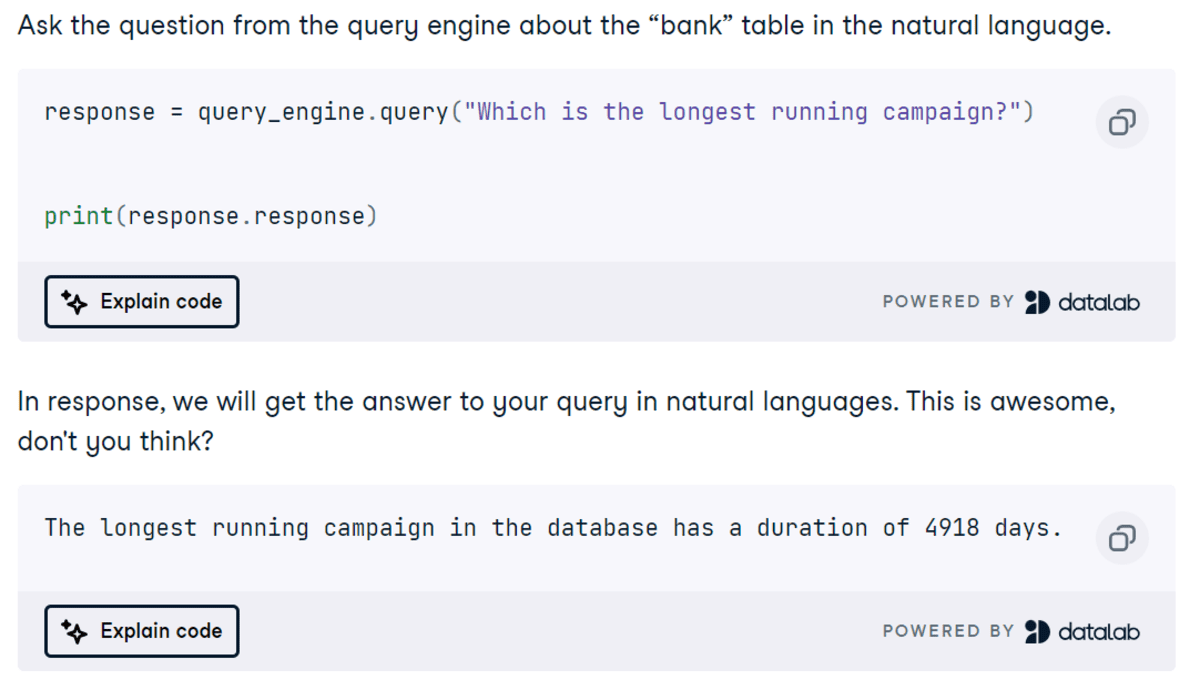

Screenshot from the project

In this project, you will learn to use DuckDB as a vector database for a RAG application and also as an SQL query engine using the LlamaIndex framework. The query engine takes natural language input, converts it into SQL, and displays the result in natural language. It is a simple and straightforward project for beginners, but before you dive into building the AI application, you need to learn a few basics of the DuckDB Python API and the LlamaIndex framework.
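The natural language to SQL to natural language flow can be sketched in plain Python. This is not the LlamaIndex implementation used in the tutorial: `question_to_sql` is a hypothetical stand-in for the language model step, and the `projects` table and its columns are made up for illustration. Only `duckdb.connect()`, `execute()`, and `fetchall()` come from the DuckDB Python API.

```python
from typing import Callable, List, Tuple

Rows = List[Tuple]


def question_to_sql(question: str) -> str:
    """Hypothetical stand-in for the LLM step that writes SQL.

    A real engine would prompt a language model with the table schema.
    """
    if "how many" in question.lower():
        return "SELECT COUNT(*) FROM projects"
    return "SELECT name FROM projects ORDER BY name"


def answer(question: str, run_sql: Callable[[str], Rows]) -> str:
    """Natural language -> SQL -> rows -> natural-language answer."""
    sql = question_to_sql(question)
    rows = run_sql(sql)
    if sql.startswith("SELECT COUNT"):
        return f"There are {rows[0][0]} projects."
    return "Projects: " + ", ".join(row[0] for row in rows)


def make_duckdb_runner() -> Callable[[str], Rows]:
    """Builds an in-memory DuckDB database with a toy table (assumed schema)."""
    import duckdb  # imported lazily; requires `pip install duckdb`

    con = duckdb.connect()  # no path given, so the database lives in memory
    con.execute("CREATE TABLE projects (name VARCHAR, level VARCHAR)")
    con.execute(
        "INSERT INTO projects VALUES "
        "('chatbot', 'beginner'), ('agent', 'intermediate')"
    )
    return lambda sql: con.execute(sql).fetchall()
```

With DuckDB installed, `answer("How many projects are there?", make_duckdb_runner())` runs the whole loop against the toy table. Passing the SQL runner in as a function keeps the question-answering logic independent of the database.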

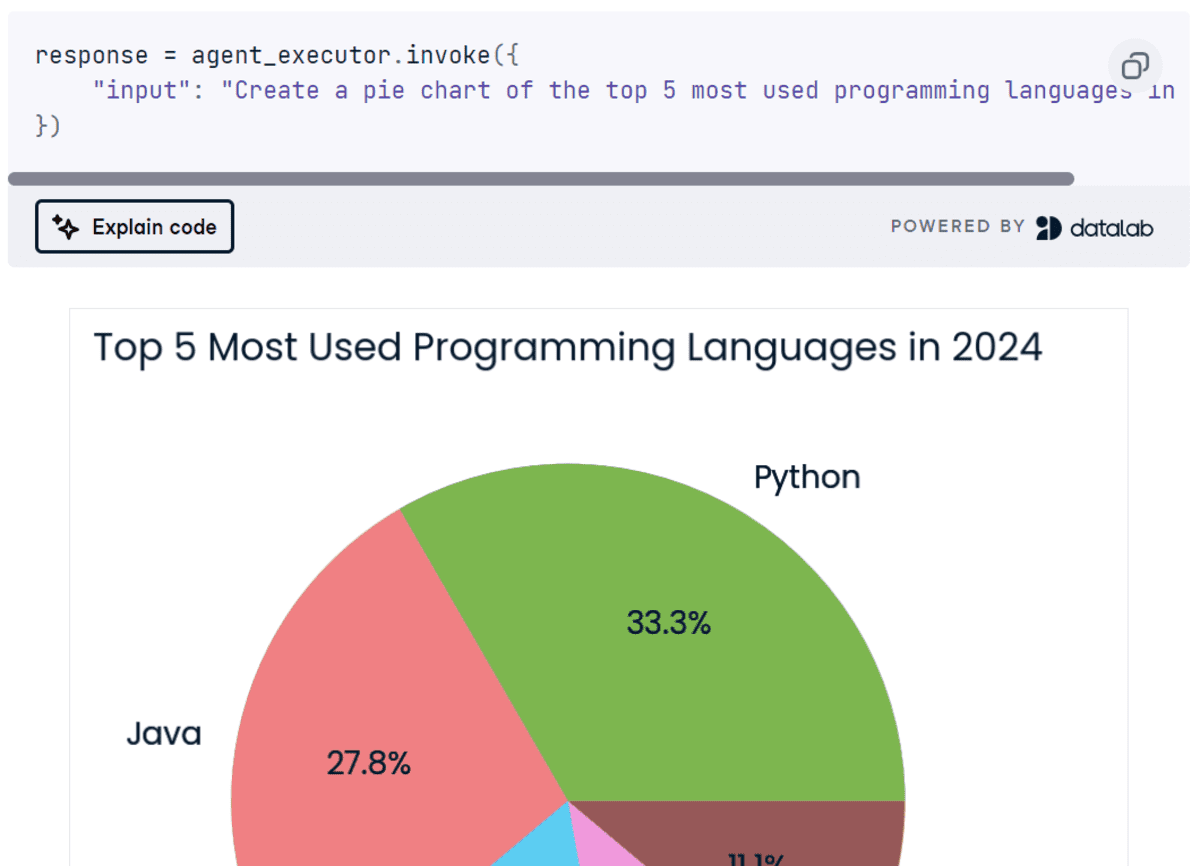

3. Building Multi-step AI Agent using the LangChain and Cohere API

Project link: Cohere Command R+: A Complete Step-by-Step Tutorial

Screenshot from the project

In my view, the Cohere API offers more functionality than the OpenAI API for developing AI applications. In this project, we will explore the various features of the Cohere API and learn to create a multi-step AI agent using the LangChain ecosystem and the Command R+ model. This AI application will take the user’s query, search the web using the Tavily API, generate Python code, execute the code using the Python REPL, and then return the visualization requested by the user. This is an intermediate-level project for individuals who have basic knowledge and are interested in building advanced AI applications using the LangChain framework.
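The search, generate, and execute sequence described above can be illustrated with a small standard-library sketch. It does not use LangChain, Cohere, or Tavily: `search` and `write_code` are hypothetical callables standing in for the web search tool and the language model, and `run_python` is a simplified stand-in for a Python REPL tool.

```python
import contextlib
import io


def run_python(code: str) -> str:
    """Runs a code string and returns whatever it printed (or the error).

    Warning: exec() is not sandboxed; only run code you trust.
    """
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {})  # fresh namespace for each run
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return buffer.getvalue()


def agent_step(query: str, search, write_code) -> str:
    """One pass of the pipeline: search -> generate code -> execute."""
    context = search(query)            # hypothetical web search tool
    code = write_code(query, context)  # hypothetical language model call
    return run_python(code)
```

A real agent would loop over steps like this one and let the model decide which tool to call next. Returning the error text instead of raising lets the model see what went wrong and try again.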

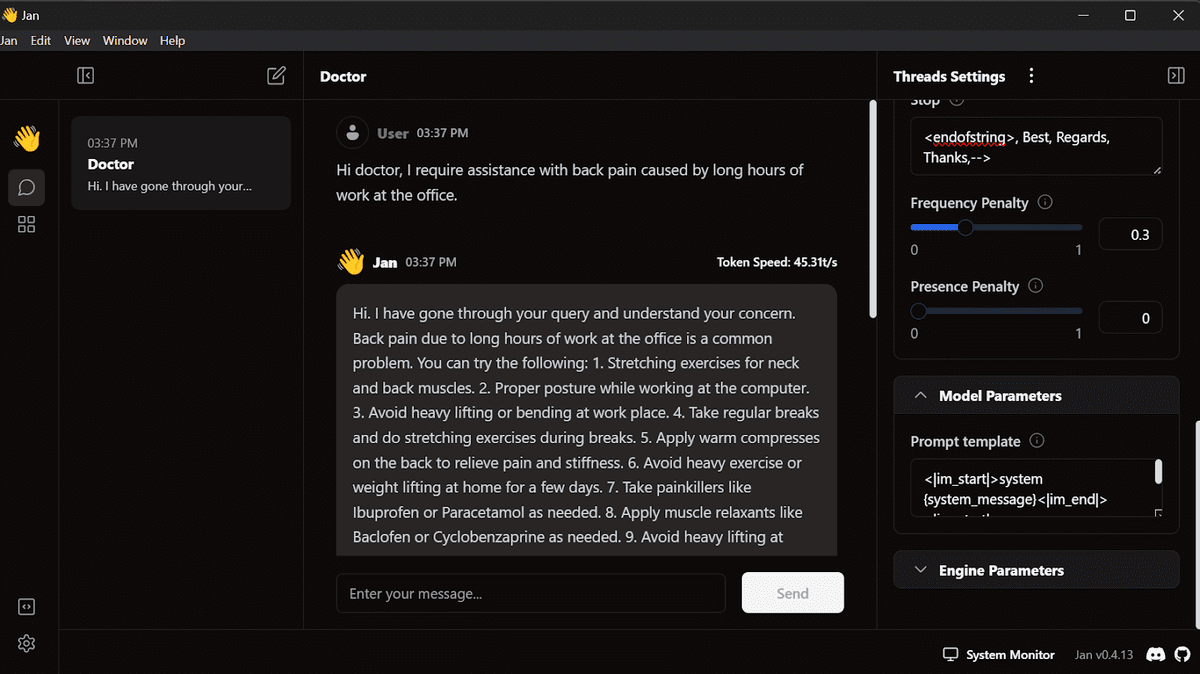

4. Fine-Tuning Llama 3 and Using It Locally

Project link: Fine-Tuning Llama 3 and Using It Locally: A Step-by-Step Guide | DataCamp

Image from the project

This is a popular project on DataCamp that will help you fine-tune any model using free resources and convert the model to the Llama.cpp format so that it can be used locally on your laptop without the internet. You will first learn to fine-tune the Llama-3 model on a medical dataset, then merge the adapter with the base model and push the full model to the Hugging Face Hub. After that, you will convert the model files into the Llama.cpp GGUF format, quantize the GGUF model, and push the file to the Hugging Face Hub. Finally, you will use the fine-tuned model locally with the Jan application.
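A quick back-of-the-envelope calculation shows why the quantization step matters for running a model on a laptop. The parameter count (8 billion) and the bit widths below are assumptions chosen for illustration; real quantization formats typically store some extra scaling data, so actual files are somewhat larger.

```python
def model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 8e9  # assumption: an 8-billion-parameter model

fp16_gb = model_size_gb(N_PARAMS, 16)  # 16-bit weights
q4_gb = model_size_gb(N_PARAMS, 4)     # idealized 4-bit quantization

print(f"16-bit: {fp16_gb:.1f} GB, 4-bit: {q4_gb:.1f} GB")
```

Under these assumptions the weights shrink from about 16 GB to about 4 GB, which is the difference between needing a dedicated GPU server and fitting into the memory of an ordinary laptop.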

5. Multilingual Automatic Speech Recognition

Model Repository: kingabzpro/wav2vec2-large-xls-r-300m-Urdu

Code Repository: kingabzpro/Urdu-ASR-SOTA

Tutorial Link: Fine-Tune XLSR-Wav2Vec2 for low-resource ASR with 🤗 Transformers

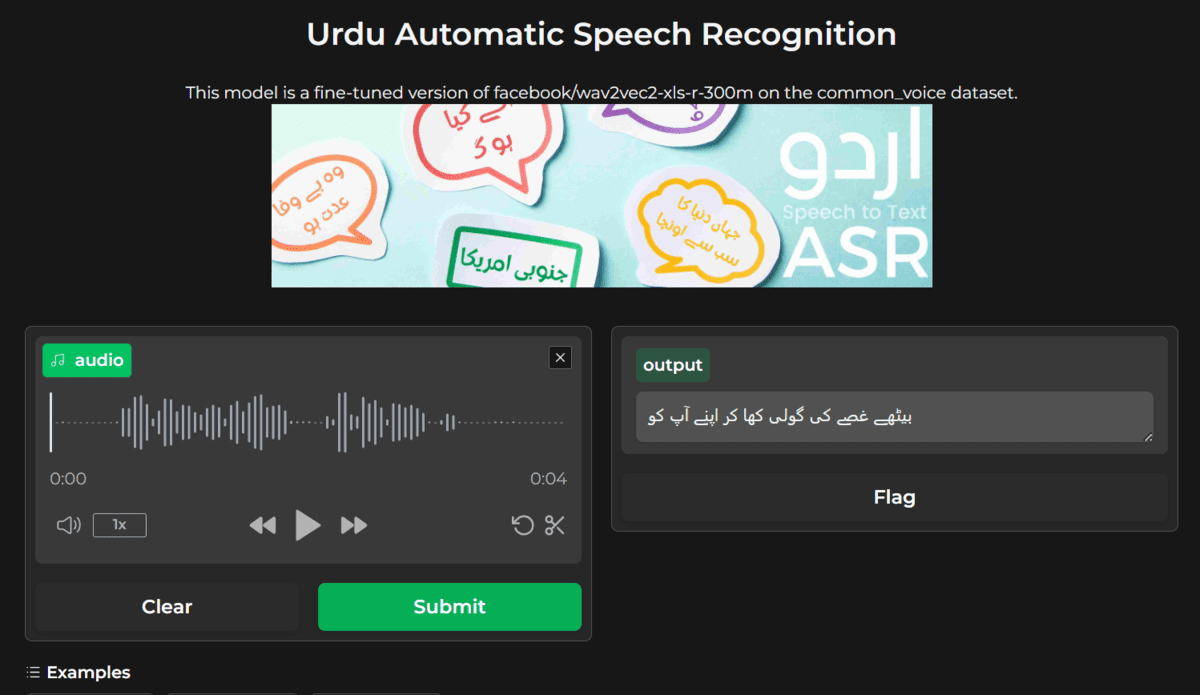

Screenshot from kingabzpro/wav2vec2-large-xls-r-300m-Urdu

My most popular project ever! It gets almost half a million downloads every month. I fine-tuned the Wav2Vec2 Large model on an Urdu dataset using the Transformers library. After that, I improved the generated output by integrating a language model.

Screenshot from Urdu ASR SOTA – a Hugging Face Space by kingabzpro

In this project, you will fine-tune a speech recognition model in your preferred language and integrate it with a language model to improve its performance. After that, you will use Gradio to build an AI application and deploy it to the Hugging Face server. Fine-tuning is a challenging task that requires learning the basics, cleaning the audio and text dataset, and optimizing the model training.

6. Building CI/CD Workflows for Machine Learning Operations

Project link: A Beginner’s Guide to CI/CD for Machine Learning | DataCamp

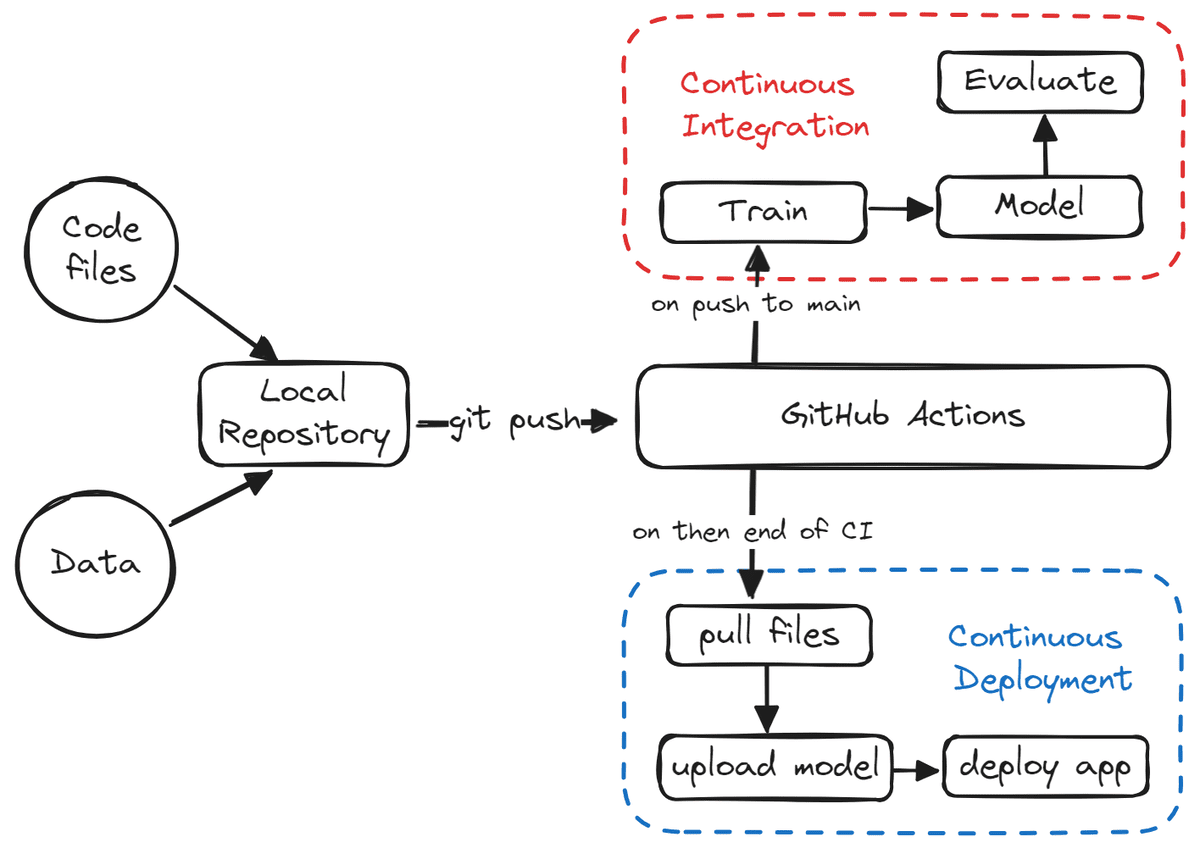

Image from the project

This is another popular project on GitHub. It involves building a CI/CD pipeline for machine learning operations. In this project, you will learn about machine learning project templates and automate the processes of model training, evaluation, and deployment. You will learn about Makefile, GitHub Actions, Gradio, Hugging Face, GitHub secrets, CML actions, and various Git operations.

Ultimately, you will build end-to-end machine learning pipelines that run when new data is pushed or code is updated. The pipeline will use the new data to retrain the model, generate model evaluations, pull the trained model, and deploy it on the server. It is a fully automated system that generates logs at every step.

7. Fine-tuning Stable Diffusion XL with DreamBooth and LoRA

Project link: Fine-tuning Stable Diffusion XL with DreamBooth and LoRA | DataCamp

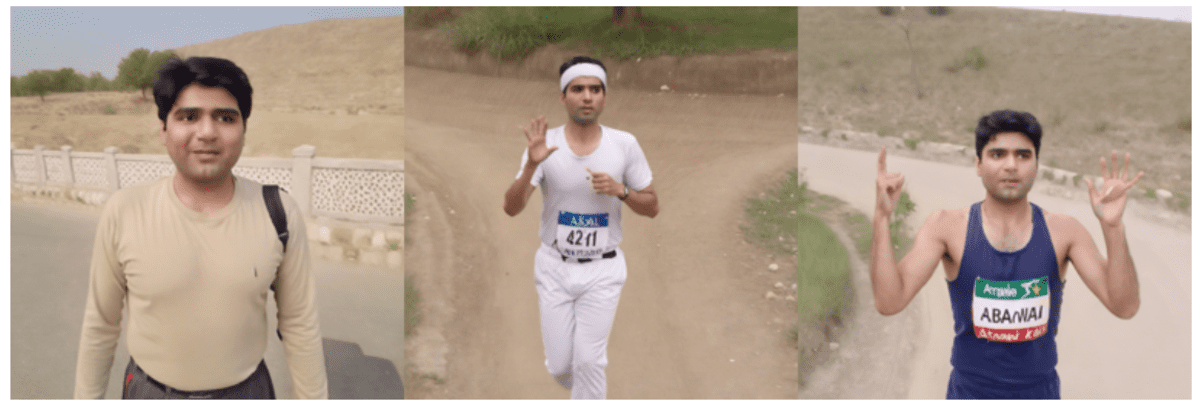

Image from the project

We have learned about fine-tuning large language models, but now we will fine-tune a generative AI model using personal photos. Fine-tuning Stable Diffusion XL requires only a few images, yet it can produce high-quality results, as shown above.

In this project, you will first learn about Stable Diffusion XL and then fine-tune it on a new dataset using Hugging Face AutoTrain Advanced, DreamBooth, and LoRA. You can use either Kaggle or Google Colab for free GPUs. It comes with a guide to help you every step of the way.

Conclusion

All the projects mentioned in this blog were built by me. I made sure to include a guide, source code, and other supporting materials.

Working on these projects will give you valuable experience and help you build a strong portfolio, which can increase your chances of securing your dream job. I highly recommend that everyone document their projects on GitHub and Medium, and then share them on social media to attract more attention. Keep working and keep building; these experiences can also be added to your resume as real experience.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.